Have you ever stopped to think about the risks of technology and how much we trust artificial intelligence without questioning it?

From movie and music recommendations to more serious decisions like medical diagnoses, credit analysis, and even court rulings, AI is already shaping our lives—often without us even noticing. But what if these systems, instead of helping, are actually making problems worse?

AI promises efficiency, accuracy, and impartiality. However, reality can be quite different. When trained with biased data, AI can discriminate against people, spread misinformation, and reinforce inequalities.

Even worse, these failures are not just technical errors but the result of rushed development, often without proper regulation.

The problem isn’t AI itself, but how it is being developed and used. If we don’t stop to reflect now, we might be creating a technology that, instead of solving challenges, only amplifies them.

The Rapid Growth of AI and Technological Risks

In recent years, artificial intelligence has evolved from a tool confined to research labs into an integral part of the global economy.

Advanced models are being applied in sectors such as healthcare, security, finance, and communication, playing crucial roles in critical decision-making. However, this rapid expansion also raises serious concerns.

Steven Adler, a former safety researcher at OpenAI, argues that AI is growing without proper precautions and that its unrestricted application could have unpredictable consequences.

Companies like OpenAI, Google, and DeepSeek are at the forefront of this revolution, but the fast pace of innovation often outstrips the ability of oversight and regulation to keep up.

Key Risks of Rapidly Expanding AI:

- Spread of Misinformation: Models like DeepSeek can create highly convincing fake content, making it harder to distinguish between facts and manipulation.

- Algorithmic Bias: When trained on biased data, AI can reinforce existing prejudices, perpetuating inequalities in areas such as credit, security, and hiring.

- Lack of Regulation: The absence of clear guidelines allows companies to develop and implement technologies without holding themselves accountable for their social and ethical impacts.

- Malicious Use of AI: Malicious actors are already exploiting AI-based tools for digital fraud, deepfakes, and political manipulation, increasing cybersecurity challenges.

Given these risks, it is crucial to analyze how AI can fail and what the consequences of these failures may be.

Risk Assessment in Artificial Intelligence Systems

Not all AI systems pose the same level of risk. Some applications, like virtual assistants and recommendation systems, have relatively low impact.

Others, such as automated medical diagnoses and credit approval algorithms, can have severe consequences if they fail. So how can we measure the risk of an AI system?

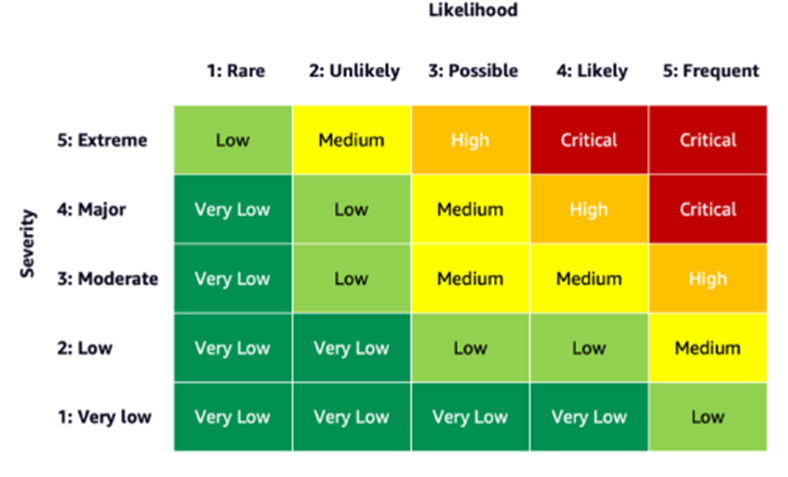

The AWS blog presents a structured approach to this analysis, proposing a Risk Matrix for AI Systems, which classifies risks based on two main factors: likelihood of failure and impact of that failure.

This matrix helps companies and developers identify which models require more oversight, auditing, and regulation before being deployed, minimizing the risks of technology in AI applications.

AI Risk Matrix: Classification and Impact

The matrix illustrates how different AI systems can be categorized based on their risks.

Models with high error probability and high impact—such as those used in healthcare and criminal justice—require rigorous supervision and testing before large-scale implementation.

On the other hand, systems with low impact and low error probability may undergo a more flexible validation process.

The categorization presented by AWS highlights the importance of a balanced approach: overregulating low-risk systems may limit innovation, while failing to regulate high-risk models can have serious consequences for individuals and society.
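As a rough illustration of the likelihood-and-impact classification described above, the sketch below scores a system on both factors and maps the product to a rating. The three-level scale, the thresholds, the names `Level` and `risk_rating`, and the example ratings are all assumptions made for illustration; they are not taken from the AWS matrix.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-point scale used for both likelihood and impact."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def risk_rating(likelihood: Level, impact: Level) -> str:
    """Combine likelihood of failure and impact of failure into one rating."""
    score = likelihood * impact  # possible values: 1, 2, 3, 4, 6, 9
    if score >= 6:   # assumed threshold for "high"
        return "high"
    if score >= 3:   # assumed threshold for "medium"
        return "medium"
    return "low"


# Illustrative placements only, not assessments of real systems.
examples = {
    "movie recommendations": (Level.LOW, Level.LOW),
    "credit approval": (Level.MEDIUM, Level.HIGH),
    "medical diagnosis": (Level.HIGH, Level.HIGH),
}

ratings = {name: risk_rating(l, i) for name, (l, i) in examples.items()}
for name, rating in ratings.items():
    print(f"{name}: {rating} risk")
```

In this sketch, a "high" rating would call for the rigorous supervision described above, while a "low" rating could justify a lighter validation process.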

Artificial Intelligence Requires Continuous Evaluation

AI is not static—it evolves constantly, and so do the risks of technology. As the AWS matrix shows, risk assessment should not be a one-time process but a continuous practice.

Companies, developers, and regulators must adopt this mindset to ensure they develop and use AI safely and ethically.

This requires not only rigorous testing before deployment but also continuous monitoring and adjustments as the technology expands into new areas of society.

By maintaining an ongoing evaluation process, we can ensure that AI serves humanity responsibly and does not become a source of harm or inequality.
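One way continuous evaluation could look in practice, as a minimal sketch: compare a model's recent error rate with the rate measured before deployment and flag the model for review when the gap exceeds a tolerance. The function name `needs_review`, the tolerance of 0.05, and the sample figures are hypothetical.

```python
from typing import Sequence


def needs_review(
    baseline_error: float,
    recent_errors: Sequence[bool],
    tolerance: float = 0.05,  # assumed acceptable drift, chosen for illustration
) -> bool:
    """Return True when the recent error rate drifts above the baseline.

    recent_errors holds one entry per recent prediction:
    True if the prediction turned out to be wrong, False otherwise.
    """
    if not recent_errors:
        return False  # nothing observed yet, so nothing to flag
    recent_rate = sum(recent_errors) / len(recent_errors)
    return recent_rate > baseline_error + tolerance


# Made-up monitoring window: 2 wrong predictions out of 10.
window = [True, True] + [False] * 8
print(needs_review(baseline_error=0.10, recent_errors=window))
```

A real monitoring process would also track fairness metrics and changes in the input data, not just overall error.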

Technological Failures: When Artificial Intelligence Makes Mistakes and Amplifies Problems

Although artificial intelligence systems are designed to optimize processes and offer innovative solutions, in practice they can make significant errors.

Many of these failures stem from structural issues in the data used to train the models, as well as the lack of proper oversight.

Examples of AI Failures:

Amazon’s AI and Gender Discrimination

Amazon developed an AI-based recruitment system, but internal testing revealed that the model discriminated against women.

The AI, trained on historical hiring data that was predominantly male, favored male resumes while penalizing female candidates.

The project was eventually scrapped, but it exposed a critical issue: algorithms can learn and reinforce biases instead of correcting them.
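A simple way to surface this kind of bias is to compare how often a model selects candidates from each group. The sketch below does this on made-up data; the function names and figures are hypothetical and say nothing about Amazon's actual system. A ratio well below 1 suggests the model deserves closer scrutiny (a common rule of thumb, the four-fifths rule, uses 0.8 as the cutoff).

```python
from collections import Counter


def selection_rates(records):
    """records: iterable of (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


# Toy data: 6 of 10 men and 3 of 10 women shortlisted.
toy_records = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(toy_records)
ratio = impact_ratio(rates)
print(rates, round(ratio, 2))
```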

AI in Justice: Harsher Sentences for Black Defendants

In the United States, software called COMPAS was used to predict the likelihood of criminal recidivism.

The system exhibited racial bias, classifying Black defendants as more likely to reoffend than White defendants, even when circumstances were similar.

This distortion led to harsher sentences for certain groups, exacerbating inequalities within the criminal justice system.
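One check an auditor might run on a risk-scoring tool is to compare false positive rates across groups, meaning how often people who did not reoffend were nevertheless flagged as high risk. The sketch below is a minimal illustration on invented data; the function name, group labels, and numbers are hypothetical and are not COMPAS figures.

```python
def false_positive_rate(records):
    """records: list of (flagged_high_risk, reoffended) boolean pairs."""
    non_reoffenders = [flagged for flagged, reoffended in records if not reoffended]
    if not non_reoffenders:
        return 0.0  # no non-reoffenders in this group, rate is undefined
    return sum(non_reoffenders) / len(non_reoffenders)


# Invented data: each group has 10 people who did not reoffend.
group_a = [(True, False)] * 4 + [(False, False)] * 6 + [(True, True)] * 5
group_b = [(True, False)] * 2 + [(False, False)] * 8 + [(True, True)] * 5

fpr_a = false_positive_rate(group_a)
fpr_b = false_positive_rate(group_b)
print(f"group A: {fpr_a:.2f}, group B: {fpr_b:.2f}")
```

A large gap between the two rates would indicate that one group bears more of the cost of the tool's mistakes, even if overall accuracy looks similar.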

DeepSeek and the Spread of Fake News

The Guardian reported that DeepSeek, an AI model developed in China, could be exploited to spread misinformation at scale. Given its level of sophistication, the system could be used to manipulate elections, spread political propaganda, and create geopolitical disinformation.

The lack of transparency regarding the model’s operation and training raises concerns about its impact on democracy and public trust in digital information.

These examples demonstrate that AI not only makes mistakes but can also amplify preexisting problems, worsening injustices and becoming a threat to society.

Is Self-Regulation by AI Companies Enough?

Given the challenges posed by artificial intelligence, a fundamental question arises: can technology companies self-regulate and ensure that their systems are safe and ethical?

According to a Forbes report, experience shows that self-regulation is not effective. Tech companies often prioritize the development of innovative and profitable products, relegating security and ethical concerns to the background.

Problems with AI Industry Self-Regulation:

- Lack of Transparency: Companies often do not disclose details about algorithmic failures or biases.

- Conflict of Interest: Implementing safety controls can slow development and reduce corporate profits.

- Lack of External Oversight: Without government regulation, companies’ practices remain unchecked.

For example, OpenAI has faced criticism from former employees who warned that the company prioritized growth over safety. In some cases, these warnings were ignored, demonstrating that relying solely on corporate ethics can be a high-risk approach.

How Can We Reduce the Risks of Technology in AI?

Artificial intelligence is already part of our daily lives, and its influence will only continue to grow. Therefore, the central question is not whether we should use it, but how we can ensure its responsible and beneficial use.

Essential Measures to Minimize the Risks of Technology in AI:

- Global Regulation: Laws and guidelines must be established to ensure AI’s safe development.

- Independent Audits: Companies should be required to submit their models to external reviews to identify biases and failures.

- Increased Transparency: Organizations need to disclose data on errors, algorithmic biases, and corrective actions taken.

- Investment in Responsible AI: Research should focus on making artificial intelligence more ethical, safe, and impartial.

These actions are essential to ensure that AI contributes to societal progress without amplifying existing problems.

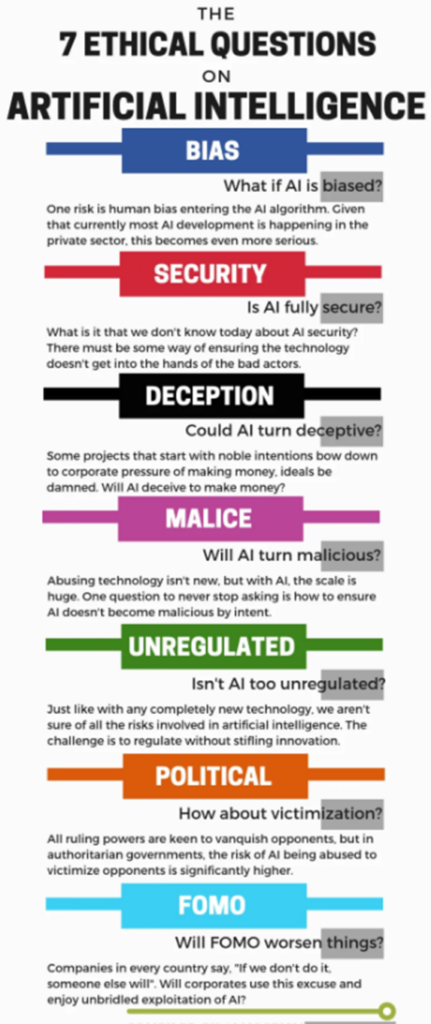

The Ethical Risks of AI: A Visual Overview

The advancement of artificial intelligence brings undeniable benefits, but it also raises ethical dilemmas that cannot be ignored.

Issues such as privacy, algorithmic discrimination, information manipulation, and labor market impacts are already among the challenges faced by businesses, governments, and society.

To better understand these risks, the infographic presents the main ethical challenges associated with AI use clearly and objectively.

Viewing these issues in a structured way makes it clear that responsible technological development is essential.

Artificial intelligence can be a powerful ally, but without regulation and transparency, its impact can be harmful. We, as a society, must ensure that this technology is used to drive progress rather than amplify inequalities and threats to democracy.

We Need Reliable and Secure AI to Reduce the Risks of Technology

The advancement of artificial intelligence presents immense opportunities but also brings complex challenges.

As evidenced by cases like Amazon’s AI, the COMPAS system, and DeepSeek, when poorly managed, this technology can amplify inequalities, spread misinformation, and compromise digital security.

Experts warn that regulation is essential to prevent companies from deploying AI models without considering their social and ethical implications.

Without transparency, oversight, and independent audits, artificial intelligence could become more of a threat than a benefit.

Technology should serve humanity, not the other way around. If we want a safe and fair future, we must act now.

References

Forbes. (2024, January 25). Trustworthy AI: String of AI fails show self-regulation doesn’t work. Forbes.

Forbes. (2023, April 29). Artificial intelligence may amplify bias, but fights it as well. Forbes.

DataDrivenInvestor. (n.d.). What are the ethical risks we run with AI? (Infographic). Medium.

AWS. (n.d.). Learn how to assess risk of AI systems. AWS Machine Learning Blog.

The Guardian. (2025, January 29). DeepSeek advances could heighten safety risk, says ‘godfather’ of AI. The Guardian.

The Guardian. (2025, January 28). Experts urge caution over use of Chinese AI DeepSeek. The Guardian.

The Guardian. (2025, January 28). Former OpenAI safety researcher brands pace of AI development ‘terrifying’. The Guardian.